Table of Contents

- Background on the Australian Social Media Ban

- Platforms Covered by the Ban

- How the Ban Will Be Enforced

- Public and Expert Reactions

- Challenges and Criticisms

- How Other Countries Are Regulating Social Media for Youth

- Conclusion: Outlook and Future Implications

Background on the Australian Social Media Ban

In a historic move, Australia has introduced the Australia Under-16 Social Media Restriction, banning children under 16 from accessing major social media platforms such as TikTok, X, Facebook, Instagram, YouTube, Snapchat, and Threads. The restriction prevents under-16s from creating new accounts, while existing profiles are being deactivated.

The government stated that this step is intended to reduce the harmful effects of social media’s design features, which encourage excessive screen time and exposure to inappropriate content. A government-commissioned study in early 2025 revealed that 96% of children aged 10-15 used social media, with 70% encountering harmful content, including violent material, misogyny, eating disorder content, and suicide-related material.

Additionally, one in seven children reported experiencing grooming-like behavior, while over half were victims of cyberbullying. The policy aims to protect children’s mental health and wellbeing while setting a precedent that other countries are watching closely.

Platforms Covered by the Ban

The ban currently includes ten major platforms: Facebook, Instagram, Snapchat, Threads, TikTok, X, YouTube, Reddit, Kick, and Twitch. The government assessed platforms against three main criteria:

- Whether the platform’s primary purpose is to enable online interaction between users

- Whether users can interact with some or all other users

- Whether users can post material publicly or privately

Platforms such as YouTube Kids, Google Classroom, and WhatsApp are exempted because they do not meet these criteria. Children under 16 may still access content on platforms that do not require an account. Critics argue that online gaming platforms like Roblox and Discord should also be included, noting that Roblox will introduce age checks on some features starting in 2025.
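To illustrate how a three-part test like this could work, here is a minimal sketch. It assumes a platform is covered only if it meets all three criteria; the article does not spell out how the criteria are combined. The platform names and their attribute values are hypothetical and are not the government's actual assessments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical record of how a platform scores on the three criteria."""
    name: str
    primary_purpose_is_interaction: bool  # criterion 1
    users_can_interact: bool              # criterion 2
    users_can_post: bool                  # criterion 3


def is_covered(platform: Platform) -> bool:
    # Assumption: all three criteria must hold for the ban to apply.
    return (
        platform.primary_purpose_is_interaction
        and platform.users_can_interact
        and platform.users_can_post
    )


# Made-up examples, not real assessments of any named service.
examples = [
    Platform("ExampleSocialApp", True, True, True),
    Platform("ExampleClassroomTool", False, True, True),
]

for p in examples:
    status = "covered" if is_covered(p) else "exempt"
    print(f"{p.name}: {status}")
```

Under this reading, a service that fails even one criterion, such as a tool whose primary purpose is not online interaction, would fall outside the ban.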

How the Ban Will Be Enforced

The government clarified that children and parents will not face punishment for violating the ban. Instead, social media companies face fines of up to A$49.5 million (US$32 million) for serious or repeated breaches. Firms are required to take “reasonable steps” to ensure under-16s cannot hold accounts on covered platforms.

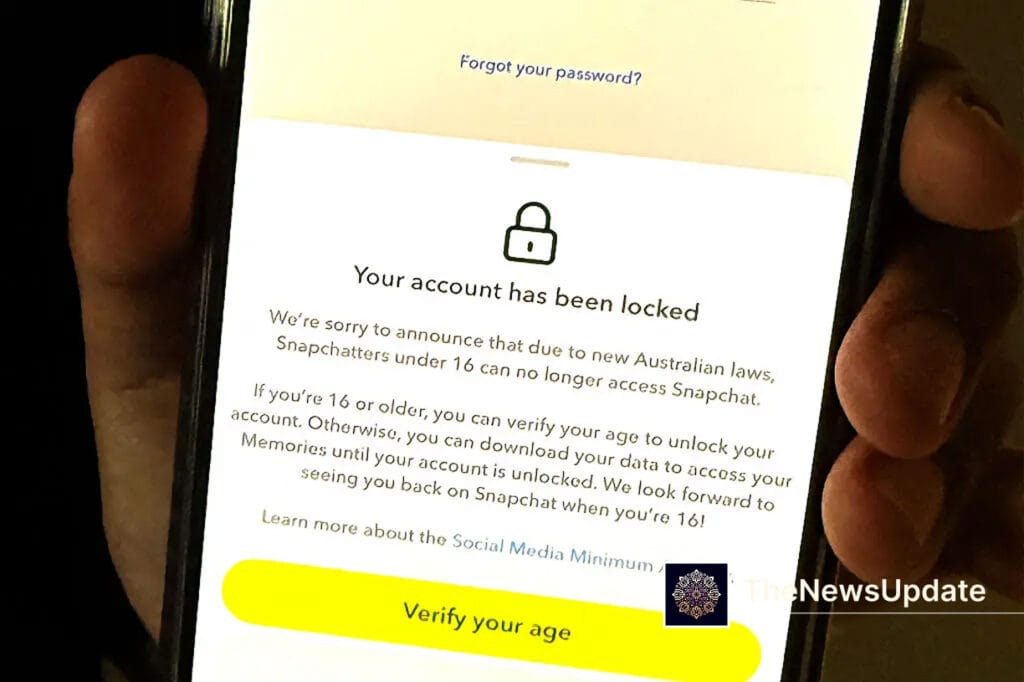

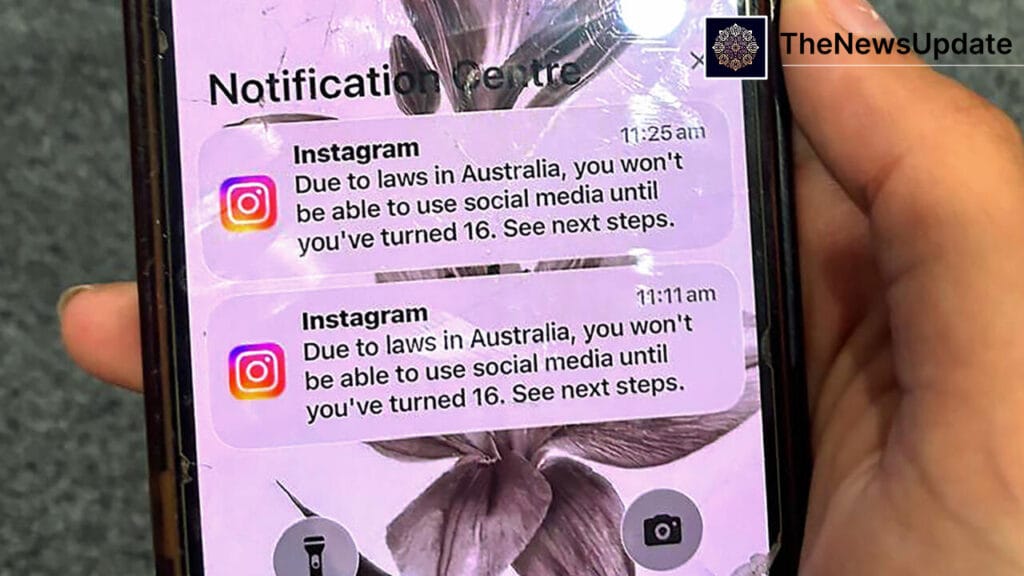

Age verification methods include checks of government-issued ID, facial recognition, voice recognition, and “age inference” algorithms that analyze online behavior. Platforms cannot rely on self-certification or parental vouching. Meta, which owns Facebook, Instagram, and Threads, began closing teen accounts on December 4, allowing affected users to verify their age via government ID or a video selfie. Snapchat introduced similar verification methods using bank accounts, photo ID, or selfies.

Public and Expert Reactions

The Australia Under-16 Social Media Restriction has sparked significant debate. Many parents support the initiative as a protective measure, while others question whether restricting access addresses underlying issues such as online literacy and resilience.

Some teenagers expressed frustration, claiming the ban assumes they cannot responsibly navigate online platforms. Reports indicate that teens may attempt to circumvent the rules by creating fake accounts or using shared accounts with parents. Experts warn that VPNs, which mask location, could undermine enforcement.

Communications Minister Anika Wells acknowledged potential imperfections, noting: “It’s going to look a bit untidy on the way through. Big reforms always do.”

Challenges and Criticisms

Critics have highlighted several potential weaknesses of the ban:

- Reliability of Age Verification: Facial assessment technology may misjudge users' ages, both missing some under-16s and wrongly blocking adult users.

- Scope Limitations: Gaming platforms, AI chatbots, and dating websites are currently excluded, leaving gaps in protection.

- Data Privacy Concerns: Collecting sensitive information for age verification raises the risk of data breaches. The government insists personal data will be strictly protected and destroyed after verification.

- Industry Pushback: Companies such as Meta, TikTok, Snap, and YouTube argued that implementation is challenging, time-consuming, and could push children to less safe areas of the internet.

Despite these criticisms, companies including Kick and Reddit have pledged to comply while expressing concerns about privacy and free expression. YouTube has challenged its classification as a social media company but is following the law’s requirements for age verification.

How Other Countries Are Regulating Social Media for Youth

The Australian ban is part of a global trend. Denmark plans to ban social media for under-15s, and Norway is considering a similar law. France recommends banning under-15s, while Spain proposes requiring parental authorization for under-16s. The UK introduced safety rules in July 2025, holding companies accountable for exposing children to illegal or harmful content.

In the US, an attempt in Utah to ban under-18s from using social media without parental consent was blocked in 2024. Globally, governments are balancing protection, free expression, and privacy concerns while exploring regulatory frameworks to mitigate online risks for children.

Conclusion: Outlook and Future Implications

The Australia Under-16 Social Media Restriction represents a bold and unprecedented step in child online protection. While challenges exist in implementation, enforcement, and data privacy, the ban could serve as a model for other countries seeking to safeguard minors from harmful content. Parents, educators, and policymakers will need to supplement legal measures with digital literacy education to help children navigate social media safely.

As this policy unfolds, monitoring its effectiveness and potential loopholes will be critical. Its success depends on cooperation between governments, social media companies, and families, ensuring that technology supports safe and responsible use by younger generations.

By The Morning News Informer — Updated December 10, 2025