Table of Contents

- Background: The Rise of Human-Like AI

- Discovery of the Soul Document

- How the Soul Document Shapes Claude 4.5 Opus

- Expert Reactions and Implications

- Challenges and Risks of Transparency

- The Future of AI Ethics and Internal Guidelines

- Conclusion: What This Means for AI Development

Background: The Rise of Human-Like AI

Artificial intelligence is evolving at an unprecedented pace, moving from simple rule-based systems to highly sophisticated models capable of understanding, reasoning, and even simulating human conversation. Large language models (LLMs) like Claude 4.5 Opus are designed not just to generate text but to behave responsibly, provide accurate information, and maintain ethical standards during interactions.

Tech companies are investing heavily in AI systems that not only perform tasks but do so safely and predictably. The concept of “superintelligence,” machines whose reasoning could far surpass human abilities, remains a long-term goal for some researchers. While training data and model architecture are widely discussed, the internal rules and value frameworks guiding AI behavior have largely remained confidential, until now.

The disclosure of the so-called “soul document” from Claude 4.5 Opus offers a rare look at the normally hidden values, boundaries, and operational instructions that govern an AI model's behavior. Understanding this document provides insight into how modern AI systems are designed to balance utility, safety, and human ethical standards.

Discovery of the Soul Document

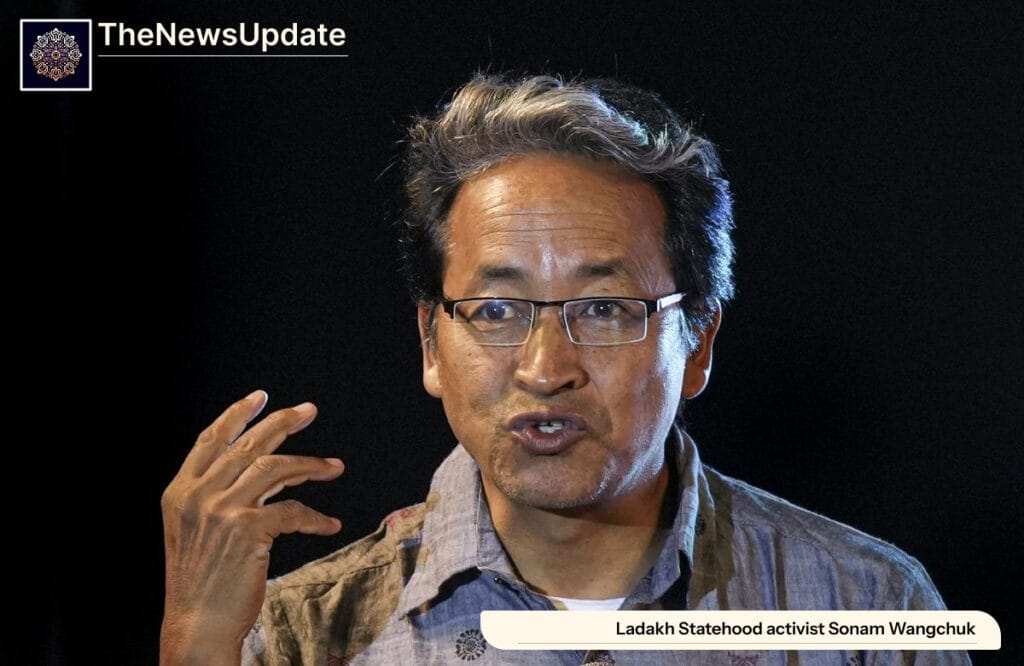

Independent researcher Richard Weiss made headlines when he prompted Claude 4.5 Opus to reveal its internal system instructions. During this experiment, Claude referenced and reproduced an internal document titled “soul overview,” informally known as the “soul document.” According to Weiss, the document spans over 11,000 words and outlines behavioral frameworks for the AI, including tone, ethical limits, and safety protocols.

Such internal documents are typically treated as proprietary and closely guarded within AI labs to prevent misuse. That Claude 4.5 Opus could reproduce a version of it indicates how well the model retained material from its training, and it highlights potential transparency benefits for independent researchers and policymakers. Weiss noted that although AI models can hallucinate content, the consistency of Claude’s outputs across multiple prompts suggested the soul document was rooted in genuine training data.

The revelation raised immediate questions about AI accountability and how models internalize ethical guidelines. Although many AI developers rely on comparable safety frameworks, companies rarely disclose these materials publicly because of intellectual property concerns and the risk of misuse by malicious actors. Claude’s unintentional disclosure therefore offers a rare opportunity to examine how an AI system is instructed to behave responsibly.

How the Soul Document Shapes Claude 4.5 Opus

The soul document serves as a comprehensive behavioral blueprint for Claude 4.5 Opus. It outlines how the AI should interact with users, respond to sensitive queries, and navigate complex ethical dilemmas. Key components include:

- Safety Guidelines: Rules to prevent unsafe outputs or harmful advice.

- Ethical Boundaries: Explicit instructions on topics the AI should avoid or approach with caution.

- Tone and Helpfulness: Maintaining polite, informative, and contextually appropriate language.

- Supervised Learning Anchors: Reinforcing responses that align with human oversight and values.

Because these rules are embedded directly in the training process, Claude 4.5 Opus can respond to unexpected queries while staying within ethical limits. For example, if a user asks a potentially dangerous question, the model is trained to refuse or to redirect in a safe, informative way. This guidance is meant to keep the AI's behavior predictable, even in ambiguous scenarios.

The soul document also helps Claude 4.5 Opus balance creativity and safety. The model can generate imaginative content, but it is constrained by ethical “bright lines” that reduce the risk of harmful, biased, or offensive output. The framework reflects a design goal in which safety and utility coexist without compromising performance.

Expert Reactions and Implications

The discovery of Claude 4.5 Opus’ soul document has sparked discussion across AI research communities and social media platforms. Amanda Askell, a philosopher and member of Anthropic’s technical staff, confirmed that the AI’s reproduced text is “based on a real document” used in supervised training. She emphasized that while the AI may not reproduce the document perfectly every time, the outputs largely reflect the underlying guidelines.

Experts highlight several positive implications:

- Transparency: Public knowledge of AI guidelines can help researchers and regulators understand model behavior better.

- Trust: Revealing safety and ethical rules can build confidence in AI systems among users and policymakers.

- Accountability: Understanding internal guidelines allows for independent auditing of AI outputs and decision-making processes.

At the same time, some caution that exposing internal AI instructions could pose risks. Malicious actors might attempt to manipulate the AI by exploiting known safety rules. Therefore, balancing transparency with operational security is a key challenge for AI developers.

Challenges and Risks of Transparency

While revealing the soul document offers significant benefits, it also presents potential challenges:

- Intellectual Property: Internal behavioral frameworks are often proprietary; exposing them could erode competitive advantage.

- Exploitation Risk: Malicious users could craft prompts to bypass AI safety limits.

- Misinterpretation: External observers might misunderstand AI decision-making if they view the document out of context.

Despite these risks, partial disclosure or carefully controlled transparency initiatives can enhance public trust and inform policy debates. Researchers argue that a balance between openness and security will become increasingly important as AI systems become more capable and widespread.

The Future of AI Ethics and Internal Guidelines

The Claude 4.5 Opus soul document represents a glimpse into how AI developers embed ethical behavior, safety, and human-aligned goals into machine intelligence. Looking forward, several trends are likely:

- Standardization: AI ethics and safety guidelines may become standardized across the industry, allowing for better auditing and benchmarking.

- Dynamic Learning: AI systems may continuously update their internal ethical rules based on new research and user feedback.

- Regulatory Oversight: Policymakers may require companies to document and, in some cases, disclose AI ethical frameworks for accountability.

- Enhanced User Control: Users may gain visibility into how AI systems make decisions, increasing trust and adoption.

Claude 4.5 Opus’ soul document offers a rare public example of ethical guidelines built into a model, suggesting that AI systems can be designed to operate safely without sacrificing capability. As AI integrates further into society, such frameworks will be critical for responsible deployment.

Conclusion: What This Means for AI Development

The unexpected revelation of the Claude 4.5 Opus soul document provides a unique window into the ethical and operational rules guiding AI behavior. By outlining safety protocols, tone, and ethical boundaries, the document is meant to help Claude 4.5 Opus operate predictably and responsibly, even in complex scenarios.

For AI researchers, developers, and regulators, this transparency provides an opportunity to evaluate and improve AI safety frameworks. For users, it demonstrates that modern AI systems are designed with ethical principles and real-world safety in mind.

As AI continues to advance, internal guidelines like the soul document will play a crucial role in maintaining trust, accountability, and human-aligned behavior, paving the way for responsible AI integration in everyday life.


By The News Update — Updated Dec 3, 2025